Water Meter Case Study

The “Water Meter” app is a mobile tool that uses your phone’s camera to read meter numbers and record them automatically. It shows a live view of the meter, waits a few seconds for a clear shot, then uses OCR to capture the reading and save it with a timestamp and location.

Accurate and fast meter readings help water companies bill customers correctly and spot leaks sooner. This cuts down on field visits and billing errors, and it gives utility teams up-to-date data on water use.

Problem Statement

Manual meter reading presents multiple challenges that slow down work and increase costs. Meters are often placed in dark, cramped, or hard-to-reach spots. Workers must bend into tight corners or climb ladders just to line up a clear view of the dial. Poor light and awkward angles make it difficult to see all the digits, which slows down each stop and drains worker energy by the end of a shift.

Problems with Manual Data Entry:

- Numbers are entered by hand, which causes frequent mistakes.

- A smudge, glare, or quick glance can turn a “2” into a “7.”

- Even small errors lead to wrong bills for homes and businesses.

- Customers then call with complaints, forcing back-office staff to verify data with field crews and delaying the billing cycle.

When mistakes happen, crews often need to revisit the same location. Each extra trip burns fuel, costs valuable hours, and reduces the time available for other stops. Paper forms and basic apps provide no safety net: they record a wrong reading just as quickly as a pen would. The result is wasted time, unnecessary travel, and lower productivity.

Impact on Operations and Business:

- Higher field costs from fuel and wasted crew hours.

- Missed schedules as teams fall behind on routes.

- Frustrated customers due to wrong bills and delayed fixes.

- Difficulty for management to measure true field efficiency.

Ultimately, the process ends up costing more while delivering less value. Without a better method, these challenges will only worsen as meter routes expand and demand for quick, accurate data continues to grow.

Objectives & Success Criteria

The project’s main goal is to replace manual typing with automatic number reading. Instead of workers entering digits by hand, the app should detect each digit on the meter and record it automatically. Every reading must be linked with the correct meter ID and timestamp to ensure accuracy.

Key Objectives:

- Eliminate manual entry by using automatic number recognition.

- Capture each reading with the right meter ID and time.

- Cut visit time and errors by at least 50%.

- Keep each scan under five seconds.

- Track performance by monitoring average time per meter and error rate before and after launch.

To achieve this, the camera system must handle tough conditions. It should adjust exposure and focus automatically, even in low light or at odd angles. The screen must display clear outlines of the digits, while a simple slider lets workers set the scan delay for proper alignment.

App Functionality Requirements:

- Log each reading automatically and sync data in the background.

- Send reports directly to the server with no extra steps.

- Provide managers with a dashboard showing completed reads, route maps, and field notes.

- Queue readings offline and upload them once the device reconnects.

If these goals are met, the project will bring measurable benefits: faster routes, reduced fuel costs, and fewer billing disputes. Success will be clear when field visit times drop by half, error rates fall below 2%, and managers can access back-end logs seamlessly. The solution must perform reliably in both dim basements and bright outdoor conditions, ensuring efficiency for crews and confidence for customers.
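The before-and-after tracking and the success thresholds described above can be expressed as a small check. This is a minimal sketch: the `RouteMetrics` shape and the `evaluateSuccess` function are hypothetical names, and only the thresholds (visit time cut by at least half, error rate below 2%) come from this section.

```typescript
// Hypothetical metrics shape; field names are illustrative, not from the app.
interface RouteMetrics {
  avgSecondsPerMeter: number; // average time spent per meter
  readings: number;           // total readings taken
  errors: number;             // readings later found to be wrong
}

interface SuccessReport {
  timeReduction: number; // fraction, e.g. 0.5 means 50% faster
  errorRate: number;     // fraction of readings that were wrong
  meetsTimeGoal: boolean;
  meetsErrorGoal: boolean;
}

// Compare pre-launch and post-launch metrics against the stated targets:
// visit time cut by at least half, error rate below 2%.
function evaluateSuccess(before: RouteMetrics, after: RouteMetrics): SuccessReport {
  const timeReduction =
    (before.avgSecondsPerMeter - after.avgSecondsPerMeter) / before.avgSecondsPerMeter;
  // With no readings yet, report a 0 error rate rather than dividing by zero.
  const errorRate = after.readings === 0 ? 0 : after.errors / after.readings;
  return {
    timeReduction,
    errorRate,
    meetsTimeGoal: timeReduction >= 0.5,
    meetsErrorGoal: errorRate < 0.02,
  };
}
```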

User & Environmental Research

The app was designed for field staff who read water meters daily. These workers face long routes, tight deadlines, and often work alone with only basic smartphones. Their main task is simple (read the meter, record the value, and move on), but conditions make it difficult.

Challenges in the Field:

- Meters placed under stairs, behind gates, or in dark, dusty corners.

- Workers must crouch, stretch, or use flashlights for visibility.

- Rainy weather and crowded areas slow down the process.

- Poor signal strength causes delays with apps needing live data.

- Crashes or lag waste time and reduce efficiency.

To solve these issues, the app was built with a camera-first flow. Workers scan the meter and the system reads the numbers automatically. Typing is only needed for corrections. Large, simple controls work even with wet or gloved hands. Every feature focuses on making meter reading faster, easier, and more accurate in any environment.

Solution Overview

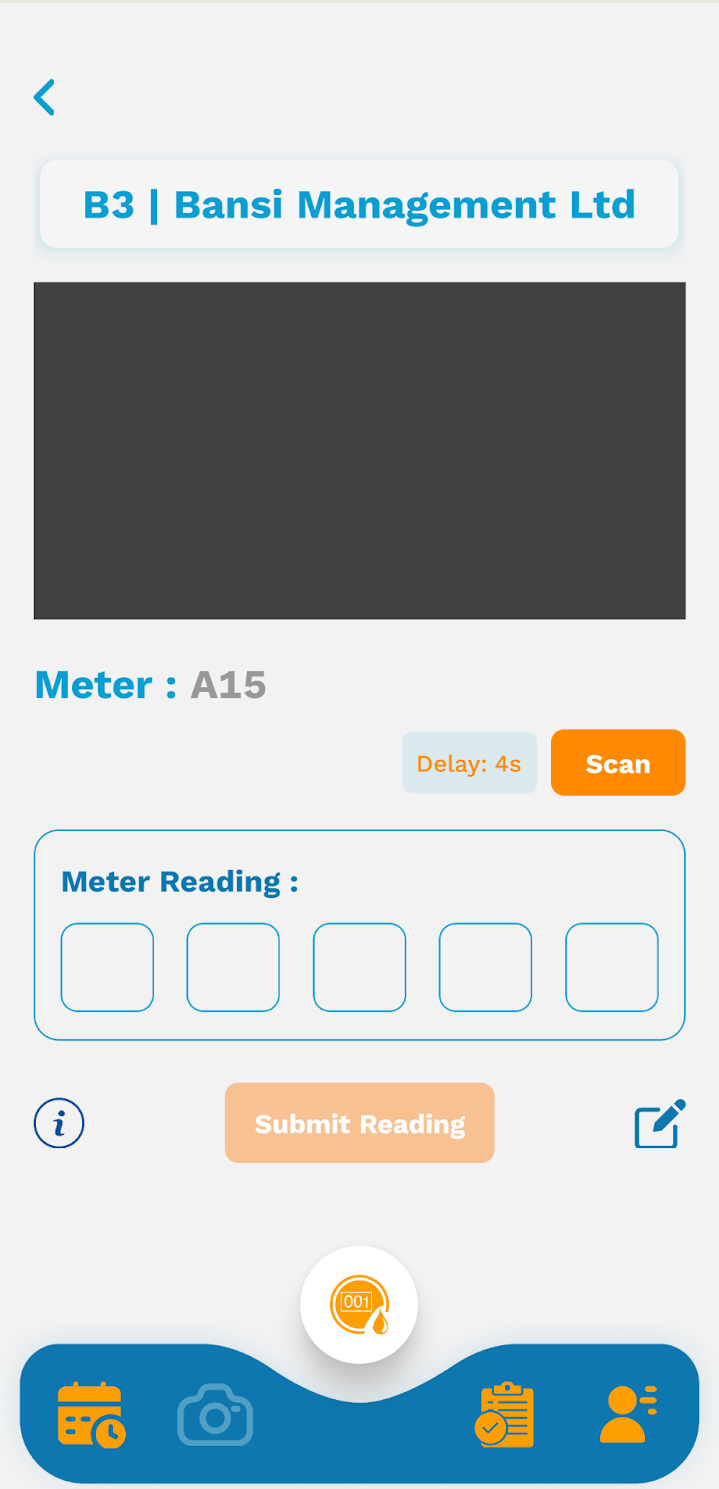

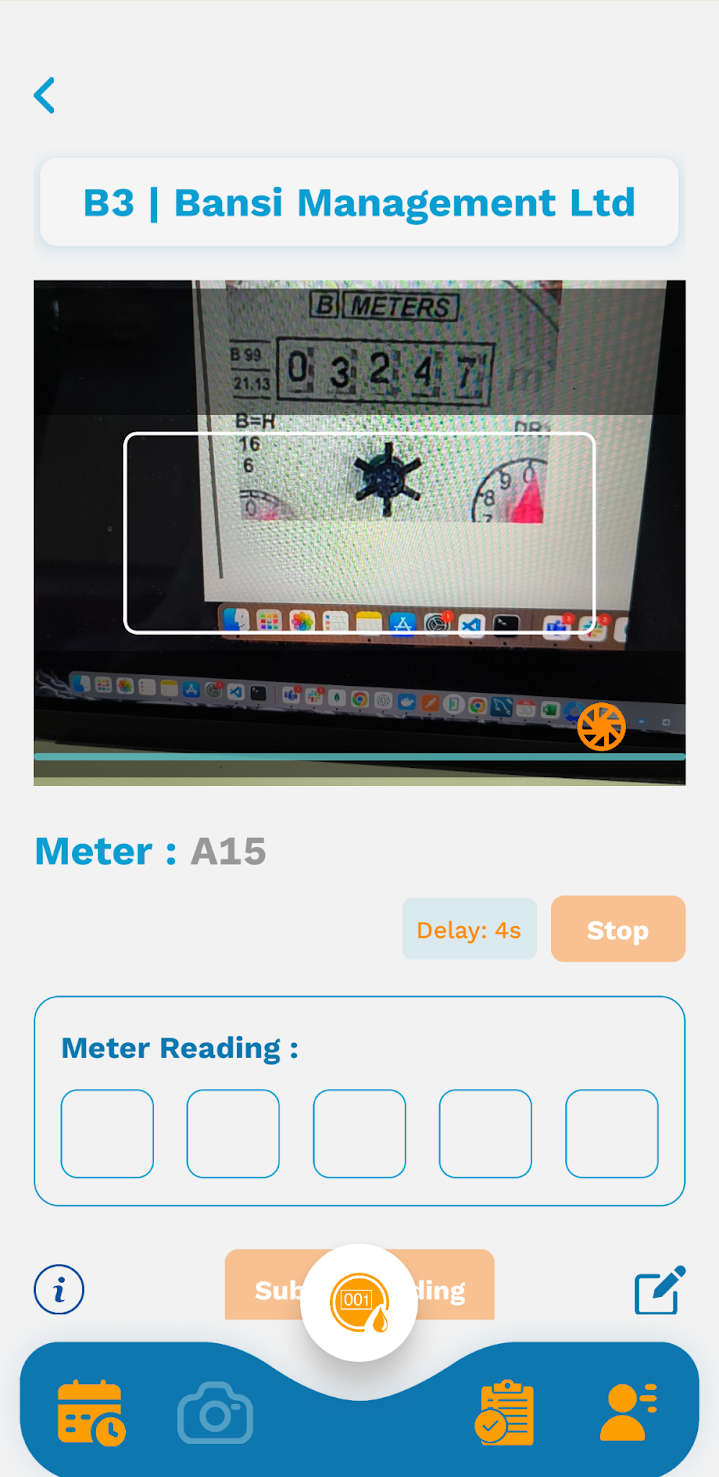

The Water Meter app streamlines meter reading with a phone’s camera and simple controls. A live viewfinder frames the meter, while options like scan delay, flash, or pause make capturing easier without strain.

Key Features:

- Digit Capture: Images are processed with Google Cloud Vision OCR, converting digits into clear text.

- Error Check: Users can tap any digit to correct mistakes instantly, reducing wrong entries and repeat visits.

- Data Pipeline: The app sends each reading, with its timestamp, GPS coordinates, and photo, through a CodeIgniter API into a MySQL database. Out-of-range values are flagged automatically.

- Dashboard Access: Supervisors track completed meters, review flagged ones, and monitor patterns via a web dashboard.
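The data pipeline flags out-of-range values, but the case study does not say what counts as out of range. The sketch below assumes one plausible rule for illustration: meters only count up, so a reading lower than the previous one is suspicious, as is usage above a configurable maximum. The `ReadingPayload` fields and the `checkRange` function are hypothetical.

```typescript
// Hypothetical payload for one reading; field names are illustrative.
interface ReadingPayload {
  meterId: string;
  value: number;      // reading in meter units
  capturedAt: string; // ISO 8601 timestamp
  lat: number;
  lng: number;
  photoUrl: string;
}

interface RangeCheck {
  flagged: boolean;
  reason?: string;
}

// Assumed rule (not from the source): meters only count up, and usage since
// the previous reading should not exceed maxUsage.
function checkRange(
  reading: ReadingPayload,
  previousValue: number | undefined,
  maxUsage: number,
): RangeCheck {
  if (!isFinite(reading.value) || reading.value < 0) {
    return { flagged: true, reason: "invalid value" };
  }
  if (previousValue === undefined) {
    return { flagged: false }; // first reading: nothing to compare against
  }
  const usage = reading.value - previousValue;
  if (usage < 0) return { flagged: true, reason: "lower than previous reading" };
  if (usage > maxUsage) return { flagged: true, reason: "usage above maximum" };
  return { flagged: false };
}
```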

By combining smooth camera capture, instant OCR, and reliable backend storage, the app saves time, reduces errors, and ensures accurate, real-time data for both field crews and admin staff.

Technical Architecture

The front end of the Water Meter app is built with React Native using Expo. This setup lets us write one codebase that runs on both Android and iOS. The user sees a simple camera view, a delay slider, and boxes for the meter digits. Expo handles device access, touch inputs, and app updates without heavy work on each platform.

For the OCR engine, we call the Google Cloud Vision API. After the app snaps a photo, it sends that image to Vision. The API returns the numbers it reads, along with a confidence score. We then show the captured digits on screen. If confidence is low, the app still lets the user type or correct the reading manually.
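Pulling individual digits and their confidence scores out of a Vision response could look like the sketch below. It assumes a `DOCUMENT_TEXT_DETECTION` request, whose `fullTextAnnotation` nests pages, blocks, paragraphs, words, and symbols, each symbol carrying `text` and `confidence`. The interfaces are a hand-written subset of that response for illustration, not the official client typings, and `extractDigits` is a hypothetical name.

```typescript
// Minimal hand-written subset of the Cloud Vision fullTextAnnotation shape.
// Only the fields used here are declared; this is not the official typing.
interface VisionSymbol { text?: string; confidence?: number; }
interface VisionWord { symbols?: VisionSymbol[]; }
interface VisionParagraph { words?: VisionWord[]; }
interface VisionBlock { paragraphs?: VisionParagraph[]; }
interface VisionPage { blocks?: VisionBlock[]; }
interface VisionTextAnnotation { pages?: VisionPage[]; }

interface Digit { char: string; confidence: number; }

// Walk pages -> blocks -> paragraphs -> words -> symbols and keep only
// single-digit symbols, in the order Vision returns them.
function extractDigits(annotation: VisionTextAnnotation): Digit[] {
  const digits: Digit[] = [];
  for (const page of annotation.pages || []) {
    for (const block of page.blocks || []) {
      for (const para of block.paragraphs || []) {
        for (const word of para.words || []) {
          for (const sym of word.symbols || []) {
            if (sym.text !== undefined && /^[0-9]$/.test(sym.text)) {
              // A missing score is treated as 0 so the digit gets reviewed.
              digits.push({ char: sym.text, confidence: sym.confidence ?? 0 });
            }
          }
        }
      }
    }
  }
  return digits;
}
```

A real meter face may also show serial numbers or unit labels, so a production parser would likely also use each symbol's bounding box to keep only the register digits.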

On the backend, we use PHP with the CodeIgniter framework. CodeIgniter gives us a clear folder structure and built-in tools for routing and security. Each new reading posts data to an API endpoint. The endpoint checks user credentials, logs the timestamp, and then hands off the raw meter data for storage.

All readings live in a MySQL database. We store the user ID, meter ID, GPS coordinates, photo link, and the final reading. Indexes on key fields keep lookups and reports fast. Daily reports query this data to show completed readings and any manual edits.
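The stored record and the daily report can be modeled as below. In the real system this aggregation would run as a MySQL query; the TypeScript version only illustrates the same grouping. The `ReadingRecord` field names and the `dailyReport` function are assumptions based on the fields listed above.

```typescript
// Hypothetical record shape mirroring the fields the case study lists.
interface ReadingRecord {
  userId: string;
  meterId: string;
  lat: number;
  lng: number;
  photoUrl: string;
  value: number;
  capturedAt: string;  // assumed ISO 8601 in UTC, e.g. "2024-03-01T08:00:00Z"
  manualEdit: boolean; // true if the user corrected the OCR value
}

interface DailySummary {
  date: string; // YYYY-MM-DD
  completed: number;
  manualEdits: number;
}

// Group records by calendar date (the first 10 characters of the ISO
// timestamp) and count completed readings and manual edits per day.
function dailyReport(records: ReadingRecord[]): DailySummary[] {
  const byDate: { [date: string]: DailySummary } = {};
  for (const r of records) {
    const date = r.capturedAt.slice(0, 10);
    if (!byDate[date]) byDate[date] = { date, completed: 0, manualEdits: 0 };
    byDate[date].completed += 1;
    if (r.manualEdit) byDate[date].manualEdits += 1;
  }
  // ISO dates sort correctly as strings.
  return Object.keys(byDate).sort().map((d) => byDate[d]);
}
```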

The full data flow works like this: capture → upload → OCR → parse → store. First, the app captures an image. Next, it uploads to our server. Then Vision extracts digits. We parse that response and wrap it in our own data model. Finally, we write a record into MySQL. This clear chain keeps each step small and easy to test.
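The capture → upload → OCR → parse → store chain maps naturally onto one small function per stage, each of which can be tested in isolation. The sketch below is synchronous to stay short (the real network steps would be asynchronous), and the `Pipeline` interface and `runPipeline` function are hypothetical.

```typescript
// One function per stage of the capture -> upload -> OCR -> parse -> store
// chain. The generic types let each stage define its own input and output.
interface Pipeline<Img, Ref, Raw, Parsed> {
  capture: () => Img;           // take the photo
  upload: (img: Img) => Ref;    // send it to the server
  ocr: (ref: Ref) => Raw;       // run text recognition
  parse: (raw: Raw) => Parsed;  // turn OCR output into the app's data model
  store: (parsed: Parsed) => string; // write the record, return its ID
}

// Run the stages in order, passing each output to the next stage.
function runPipeline<Img, Ref, Raw, Parsed>(p: Pipeline<Img, Ref, Raw, Parsed>): string {
  const img = p.capture();
  const ref = p.upload(img);
  const raw = p.ocr(ref);
  const parsed = p.parse(raw);
  return p.store(parsed);
}
```

Because each stage is passed in, a test can replace any step with a stub, for example an `ocr` function that returns a fixed string, and check the rest of the chain on its own.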

Key Features & Flows

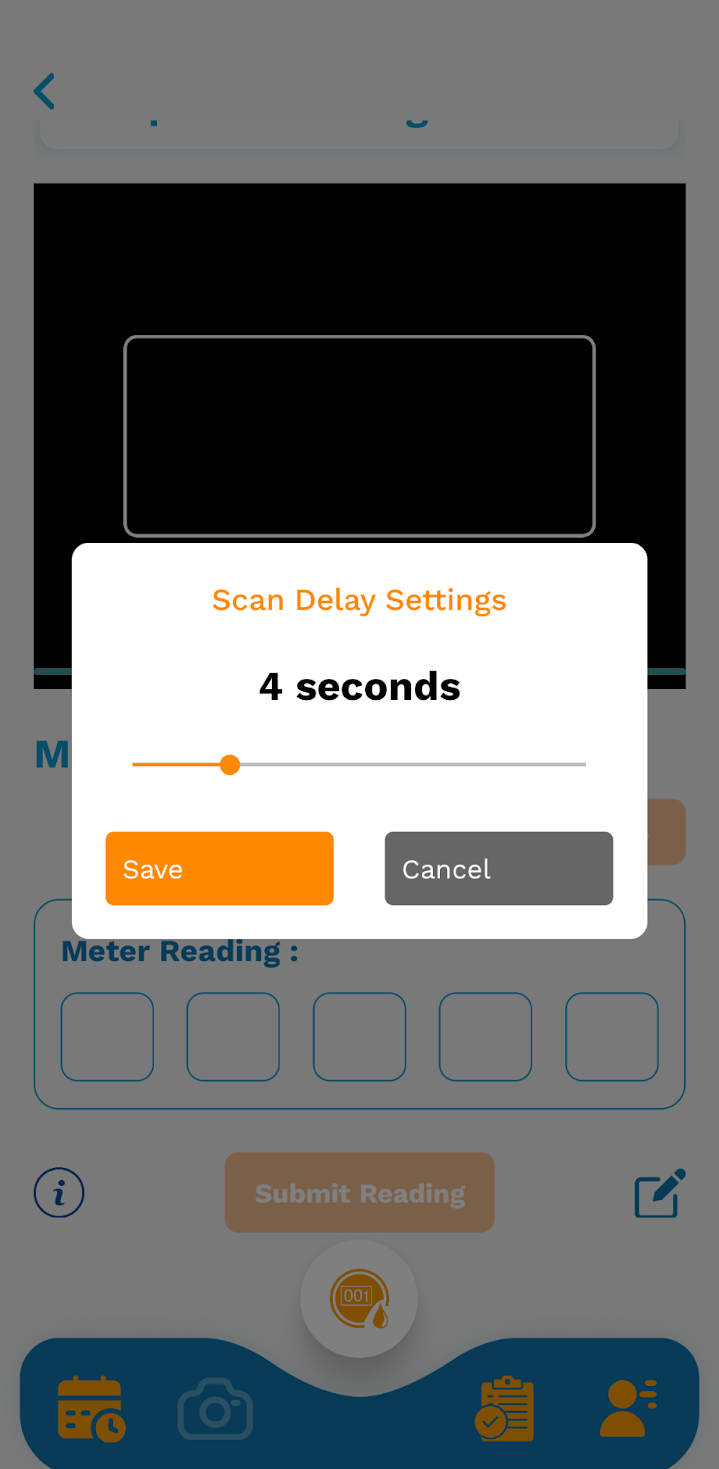

Auto-Capture & Delay Settings

A slider lets you set how long the app waits before it snaps a photo. You drag the control to pick a delay that suits each meter’s angle and lighting. For example, a 4-second delay gives you time to steady your hand and center the numbers in the frame. When you tap “Scan,” the app counts down and then takes the shot on its own. This hands-free capture cuts out fumbling and helps you get clearer images.
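The countdown can be kept as a pure function of the chosen delay and the elapsed time, which makes it easy to test without a camera. This is a sketch: the 1 to 10 second slider range and the `countdownState` name are assumptions (the case study only mentions a 4-second example), and the actual camera call is left out.

```typescript
// Assumed slider bounds; the case study only gives 4 s as an example.
const MIN_DELAY_S = 1;
const MAX_DELAY_S = 10;

// Clamp the slider value to the allowed range and round to whole seconds.
function clampDelay(seconds: number): number {
  return Math.min(MAX_DELAY_S, Math.max(MIN_DELAY_S, Math.round(seconds)));
}

interface Countdown {
  remaining: number;      // whole seconds left to show on screen
  shouldCapture: boolean; // true once the delay has fully elapsed
}

// Given when "Scan" was tapped and the current time (both in ms),
// report what to display and whether to take the photo.
function countdownState(delaySeconds: number, startedAtMs: number, nowMs: number): Countdown {
  const delayMs = clampDelay(delaySeconds) * 1000;
  const leftMs = Math.max(0, delayMs - (nowMs - startedAtMs));
  return { remaining: Math.ceil(leftMs / 1000), shouldCapture: leftMs === 0 };
}
```

A timer in the UI would call `countdownState` a few times per second, show `remaining`, and trigger the camera once `shouldCapture` first becomes true.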

Low-Light & Angle Support

The app puts a flash toggle right in the camera view. Tap it to add light when the meter sits in shadow. The app also applies simple filters to boost contrast and sharpness, cutting glare and haze so the numbers stand out. The viewfinder guides you to hold the phone flat, even at odd angles, which makes a clear shot much more likely.
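One common way to boost contrast is a linear contrast stretch, which maps the darkest pixel in the frame to black and the brightest to white. The case study does not say which filters the app uses, so this is an illustrative technique on an 8-bit grayscale buffer, not the app's actual code; `stretchContrast` is a hypothetical name.

```typescript
// Linear contrast stretch on an 8-bit grayscale buffer: the darkest pixel
// maps to 0, the brightest to 255, and everything else scales in between.
function stretchContrast(gray: Uint8Array): Uint8Array {
  let lo = 255;
  let hi = 0;
  for (let i = 0; i < gray.length; i++) {
    if (gray[i] < lo) lo = gray[i];
    if (gray[i] > hi) hi = gray[i];
  }
  const out = new Uint8Array(gray.length);
  if (hi <= lo) {
    out.set(gray); // flat or empty image: nothing to stretch
    return out;
  }
  const scale = 255 / (hi - lo);
  for (let i = 0; i < gray.length; i++) {
    out[i] = Math.round((gray[i] - lo) * scale);
  }
  return out;
}
```

A single bright glare spot can set the maximum and weaken the stretch, so a sturdier variant would clip a small percentage of the darkest and brightest pixels before scaling.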

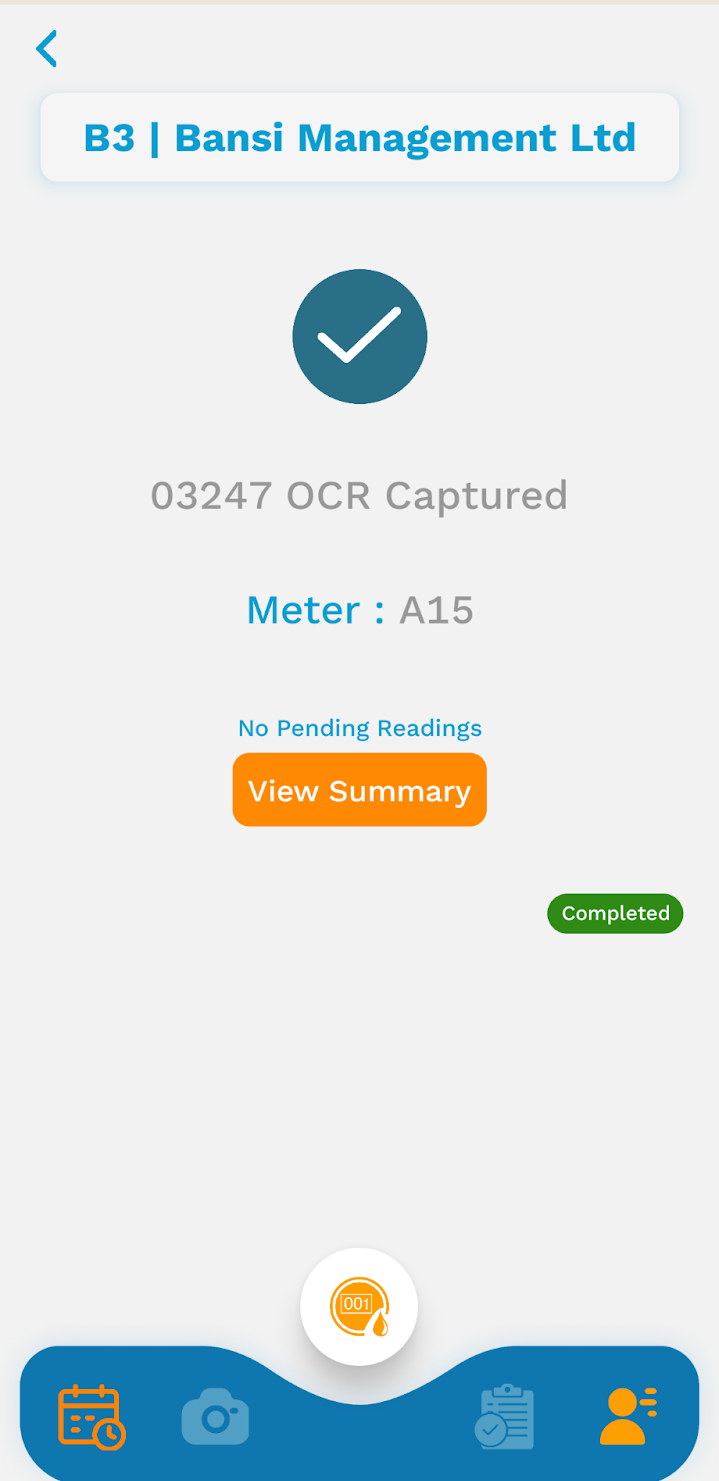

OCR Readout & Confirmation

When the camera snaps a picture, the app runs OCR and shows the result right away. You’ll see a large check mark and the text “03247 OCR Captured” on screen. This instant readout lets you know the meter value was grabbed. If the number looks off, you can tap the edit icon to type in the correct digits. This manual override option sits next to the readout so you can fix errors before you move on.

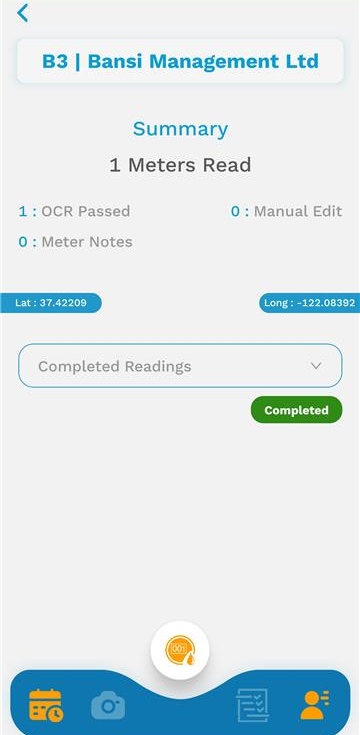

Summary & Reporting

The app offers a clear dashboard that lists every completed reading with key details. Each entry shows the meter ID, the captured value, and the exact date and time. You can spot any readings that need a manual edit. A map view marks each reading by its GPS position, so you see where work was done. Filters let you narrow results by date, meter group, or status. You can export the data as a simple report for your office team. This view helps managers track progress, find gaps, and share results quickly.
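Filtering by date, meter group, or status and exporting a report could be sketched as follows. The `DashboardRow` shape, the status values, and the CSV column names are hypothetical; the quoting follows the usual RFC 4180 convention so that a group name containing a comma stays in one column.

```typescript
// Hypothetical dashboard row and status values.
type Status = "ok" | "flagged" | "edited";

interface DashboardRow {
  meterId: string;
  group: string;
  value: number;
  capturedAt: string; // ISO 8601, e.g. "2024-03-01T08:00:00Z"
  lat: number;
  lng: number;
  status: Status;
}

interface RowFilter {
  from?: string; // inclusive date, YYYY-MM-DD
  to?: string;   // inclusive date, YYYY-MM-DD
  group?: string;
  status?: Status;
}

// Keep rows matching every filter that is set; ISO dates compare as strings.
function filterRows(rows: DashboardRow[], f: RowFilter): DashboardRow[] {
  return rows.filter((r) => {
    const day = r.capturedAt.slice(0, 10);
    if (f.from !== undefined && day < f.from) return false;
    if (f.to !== undefined && day > f.to) return false;
    if (f.group !== undefined && r.group !== f.group) return false;
    if (f.status !== undefined && r.status !== f.status) return false;
    return true;
  });
}

// Quote a field if it contains a comma, quote, or line break; double any quotes.
function csvField(v: string | number): string {
  const s = String(v);
  return /[",\r\n]/.test(s) ? '"' + s.replace(/"/g, '""') + '"' : s;
}

function toCsv(rows: DashboardRow[]): string {
  const header = "meter_id,group,value,captured_at,lat,lng,status";
  const lines = rows.map((r) =>
    [r.meterId, r.group, r.value, r.capturedAt, r.lat, r.lng, r.status].map(csvField).join(","),
  );
  return [header].concat(lines).join("\n");
}
```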

Challenges & Solutions

The app faced three big hurdles in the field. Each one got its own fix to keep meter readings fast and accurate.

Blurry captures in poor light

Meters are often in dark basements or tight closets. Early tests showed blurred images and failed scans. We added simple image-preprocessing steps on the phone. The app boosts contrast, reduces noise, and sharpens edges before sending the frame to OCR. A quick auto-crop also zooms in on the display. These tweaks cut blurry failures by over 60%.
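The noise-reduction and auto-crop steps could be approximated with a 3x3 median filter and a rectangular crop on a grayscale frame. Median filtering is a standard denoising technique chosen here for illustration; the case study does not name the app's filters, and how the display rectangle is located is not shown. `median3x3` and `crop` are hypothetical names.

```typescript
// 3x3 median filter on an 8-bit grayscale image stored row-major.
// Edge and corner pixels use only their in-bounds neighbors; for the
// even-sized windows this produces, the upper of the two middle values is used.
function median3x3(gray: Uint8Array, width: number, height: number): Uint8Array {
  const out = new Uint8Array(gray.length);
  const window: number[] = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      window.length = 0;
      for (let dy = -1; dy <= 1; dy++) {
        for (let dx = -1; dx <= 1; dx++) {
          const nx = x + dx;
          const ny = y + dy;
          if (nx >= 0 && nx < width && ny >= 0 && ny < height) {
            window.push(gray[ny * width + nx]);
          }
        }
      }
      window.sort((a, b) => a - b);
      out[y * width + x] = window[Math.floor(window.length / 2)];
    }
  }
  return out;
}

// Copy a w x h rectangle starting at (x0, y0); assumes it lies inside the image.
function crop(gray: Uint8Array, width: number, x0: number, y0: number, w: number, h: number): Uint8Array {
  const out = new Uint8Array(w * h);
  for (let y = 0; y < h; y++) {
    const start = (y0 + y) * width + x0;
    out.set(gray.subarray(start, start + w), y * w);
  }
  return out;
}
```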

Misreads

Some digits look almost the same to the OCR engine. To catch errors, we set a confidence threshold. If the read score falls below 85%, the app flags that digit in a bright box. The user can tap the box to pick the right number or type it in manually. This mix of auto and manual checks cut wrong reads by three-quarters.
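Per-digit flagging against the 85% threshold, plus a tap-to-correct step, might look like this. The threshold comes from the case study, expressed as 0.85 on the assumption that scores are on a 0 to 1 scale (as Vision's are); the `DigitCell` shape and the function names are hypothetical. The reading is kept as a string so leading zeros, as in 03247, survive.

```typescript
// Confidence is assumed to be on a 0-1 scale, as in Vision responses.
interface Digit {
  char: string;
  confidence: number;
}

interface DigitCell extends Digit {
  flagged: boolean; // drawn in a highlighted box for the user to check
}

const CONFIDENCE_THRESHOLD = 0.85; // the 85% cutoff from the case study

// Mark every digit whose score is below the threshold.
function flagDigits(digits: Digit[]): DigitCell[] {
  return digits.map((d) => ({
    char: d.char,
    confidence: d.confidence,
    flagged: d.confidence < CONFIDENCE_THRESHOLD,
  }));
}

// Replace one digit with the user's choice; a corrected digit counts as
// confirmed, so it gets full confidence and is no longer flagged.
function correctDigit(cells: DigitCell[], index: number, char: string): DigitCell[] {
  if (!/^[0-9]$/.test(char)) throw new Error("digit must be 0-9");
  return cells.map((c, i) => (i === index ? { char, confidence: 1, flagged: false } : c));
}

// True while any digit still needs the user's attention.
function hasFlagged(cells: DigitCell[]): boolean {
  return cells.some((c) => c.flagged);
}

// Join the digits into the reading; a string keeps leading zeros.
function readingValue(cells: DigitCell[]): string {
  return cells.map((c) => c.char).join("");
}
```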

Offline operation

Many sites have spotty cell service. The app now saves each scan locally when it can’t reach the server. A background task retries every minute. Once the phone is back online, all saved readings sync at once. Users also see a queue icon and count of pending uploads. This keeps crews working without pause, even in no-signal zones.
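The offline behavior (save locally, retry every minute, sync everything once online, show a pending count) can be sketched as a small queue. This assumes a hypothetical synchronous `send` callback that returns `true` on success; real uploads are asynchronous, and a production queue would also be persisted to device storage so it survives an app restart. `UploadQueue` is a hypothetical name.

```typescript
// Retry interval matching the one-minute retry described above.
const RETRY_INTERVAL_MS = 60000;

class UploadQueue<T> {
  private pending: T[] = [];
  private lastAttemptMs = -Infinity;

  constructor(private send: (item: T) => boolean) {}

  // Save a reading locally; it stays queued until an upload succeeds.
  enqueue(item: T): void {
    this.pending.push(item);
  }

  // Number shown next to the queue icon.
  pendingCount(): number {
    return this.pending.length;
  }

  // Call periodically (e.g. from a background task). Attempts a flush at most
  // once per retry interval, sending everything queued and stopping at the
  // first failure so readings stay in capture order. Returns how many were sent.
  tick(nowMs: number): number {
    if (this.pending.length === 0 || nowMs - this.lastAttemptMs < RETRY_INTERVAL_MS) return 0;
    this.lastAttemptMs = nowMs;
    let sent = 0;
    while (this.pending.length > 0 && this.send(this.pending[0])) {
      this.pending.shift();
      sent++;
    }
    return sent;
  }
}
```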

Results & Impact

After adding the camera scan tool, meter reading became much faster. The time to read one meter dropped from two minutes to just thirty seconds. Instead of typing numbers by hand, the app captures a clear image and shows the result instantly. This lets field teams finish more meters every day.

Fewer Errors:

- Mistakes dropped from 12 in every 100 readings to just 1–2 in 100.

- Crews no longer need to revisit meters for corrections.

- Teams stay on schedule and complete more stops.
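The headline figures can be checked against the project targets with simple arithmetic, using only the numbers reported here: two minutes (120 seconds) down to 30 seconds per meter, and errors falling from 12 to 1–2 per 100 readings.

```latex
\begin{aligned}
\text{time reduction} &= \frac{120\,\text{s} - 30\,\text{s}}{120\,\text{s}} = 0.75 = 75\% \\
\text{error reduction} &= \frac{12 - 2}{12} \approx 83\% \quad\text{to}\quad \frac{12 - 1}{12} \approx 92\%
\end{aligned}
```

Both reductions clear the 50% target. The post-launch error rate of 1–2% sits at or just under the 2% goal, so it is the low end of that range that strictly meets the "below 2%" criterion.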

Lower Costs:

- Less fuel used since trucks make fewer trips.

- Crews work fewer extra hours.

- Support calls about billing mistakes dropped by 70%.

- Office staff had more time to focus on other tasks.

Billing also sped up. With fewer errors to fix, invoices went out on time, payments came faster, and cash flow improved.

Customer & Staff Benefits:

- Customers got faster service and fewer wrong bills.

- Complaints went down, and feedback scores improved.

- Field teams felt less stressed and could finish routes more easily.

Overall: The camera scan tool saved time, reduced mistakes, cut costs, and improved both customer satisfaction and staff efficiency.

Lessons Learned

Giving users control over scan timing made a big difference. Auto-capture works in good light but fails in dim or awkward spots. With an adjustable delay, workers frame the meter, tap “Scan,” and know exactly when the photo will snap. This reduced blurry shots and boosted confidence.

User Control Benefits:

- Adjustable delay for flexible capture.

- Clear on-screen confirmation builds trust.

- Quick accept or edit option keeps workflow smooth.

Real-world testing proved essential. Meters in basements, closets, or outdoor weather exposed issues like glare, shadows, and reflections. Each test refined image processing and UI prompts, leading to best practices for light, angle, and timing.

Future Enhancements

The app works well now, but there is more we plan to do. One key upgrade is on-device (offline) OCR. Right now, the app sends each image to Google’s servers to read the numbers, which requires a network connection. In places with no signal, that is a problem. We want to move this step onto the phone itself. Using edge AI, the phone could read the meter without an internet connection. This would save time, cut data use, and help workers in remote areas.

Another planned feature is smart tracking. A dashboard could show trends, such as drops or spikes in use, and send alerts when something looks off, like a leak or a faulty meter. Managers could spot problems early and fix them before they grow. The same data would also help track staff performance and how long each job takes.
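Smart tracking is still a plan, but one simple form of the alert rule is to compare the latest period's usage with the median of earlier periods. The rule, the factor of 3, and the four-period minimum below are illustrative assumptions, not a validated leak detector, and `usageAlert` is a hypothetical name.

```typescript
type Alert = "none" | "spike" | "drop";

// Median of a list of numbers (the input is not modified).
function median(values: number[]): number {
  const s = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 === 0 ? (s[mid - 1] + s[mid]) / 2 : s[mid];
}

// usage: consumption per period (e.g. per billing cycle), oldest first.
// Flags the latest period if it is far above or below the typical level.
function usageAlert(usage: number[], factor = 3): Alert {
  if (usage.length < 4) return "none"; // assumed minimum history
  const history = usage.slice(0, -1);
  const latest = usage[usage.length - 1];
  const typical = median(history);
  if (latest > typical * factor) return "spike"; // possible leak
  if (latest < typical / factor) return "drop";  // possible stuck or faulty meter
  return "none";
}
```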

We’re also working to support more meter types. Right now, the app reads water meters only. But many homes and buildings have gas and electric meters too. These work in similar ways but have different screens and numbers. Adding support for them would make the app useful for more utility teams. One app for all meter types would be easier to train for, easier to manage, and better for the people using it every day.

Conclusion

The Water Meter app fixes key problems in the field. It cuts down the time it takes to read a meter. Workers no longer need to struggle with poor light or awkward spots. Instead of writing down numbers, they point their phone, wait a few seconds, and get an instant reading on-screen. The camera captures the digits, and the app pulls out the number using OCR. It’s simple, fast, and clear.

This change removes most human errors. Staff don’t need to double-check readings or visit the same place twice. Readings are stored, tracked, and sent to the backend in real time, or as soon as the phone reconnects. Billing becomes more accurate. Customers complain less. Teams cover more ground in a day.

The approach is not limited to water. With only small changes to the camera flow and number capture, the app could be adapted for gas or power meters. This makes it easy to scale.

As cities grow, tools like this can help bring faster, smarter service. With small upgrades, the app could plug into city dashboards or alert teams when a meter seems off. What started as a simple fix for slow readings can grow into something bigger, supporting better service across all utilities.